Agree not to Disagree

About a year ago, I stumbled upon the GoEmotions dataset. It's a dataset that contains user texts from Reddit with emotion annotations. Google attached its name to the dataset and published a paper about how the dataset got created.

All of this effort might give you the impression that this is a dataset that's ready for a machine learning model. You'd be wrong, though. For about a year, GoEmotions has been my go-to example to explain you shouldn't blindly trust datasets. I've used it as an example in PyData talks, a recent NormConf talk a calmcode course, and a Prodigy tutorial. It even caused me to start an open-source project that tries to find doubtful examples in datasets.

GoEmotions has been a great motivation for me to dive into techniques that find mislabeled examples. But while these techniques are great, they don’t answer the question of why so many "bad" labels appear in the dataset in the first place. It's one thing to find bad annotations in ML, but it'd be better to learn how we might get good annotations instead.

So in this blog post, I’ll explore this by diving into the dataset some more.

What is in a dataset?

The GoEmotions dataset contains 211,225 annotations, supplied by 82 annotators, of 58,011 text examples found on Reddit. These are texts that a user typed as a reply in a conversation in one of the many subreddits. These texts are stripped from the conversation and given to a group of annotators. These annotators would see examples one-by-one in an interface that allowed them to attach emotion tags to a it. They also had the option of flagging an example as unclear. Each example had at least three annotators look at it. If an annotator felt that the example was unclear, then two more annotators would come in to give their opinion.

Annotators could select up to 27 emotion tags for these texts:

amusement, excitement, joy, love, desire, optimism, caring, pride, admiration, gratitude, relief, approval, realization, surprise, curiosity, confusion, fear, nervousness, remorse, embarrassment, disappointment, sadness, grief, disgust, anger, annoyance, disapproval

There is also a neutral option available. The paper also goes into a bit more depth on the definition of these emotions and explains how these labels correlate and how often the annotators agree.

??? quote "Extra details on the interaction of emotions."

<figure>

<img src="goemotions_correlation.webp" width="80%"/>

<figcaption>This figure is from the <a href="https://aclanthology.org/2020.acl-main.372/">GoEmotions paper</a>. Some emotions occur together more than others.</figcaption>

</figure>

??? quote "Some more worthwhile details about the dataset construction"

- An effort was made to filter the text upfront based on length. The NLTK tokenizer was used to select only texts that have 3-30 tokens.

- An effort was made to have annotators that are English natives. The paper does mention that all annotators live in India.

- An effort was made to remove subreddits that were not safe for work or that contained too many vulgar tokens (according to a predefined word list).

- An effort was made to balance different subreddits such that larger subreddits wouldn’t bias the dataset.

- An effort was made to remove subreddits that didn’t offer a variety of emotions.

- An effort was made to mask the names of people as well as references to religions.

Expectations

The GoEmotions dataset doesn’t just provide texts with annotations, it also provides us with documentation in the form of a paper and the annotator IDs. Most datasets don’t offer this at all and the annotator IDs are especially useful because they allow us to look at annotator agreement.

So how often do annotators agree?

Out of the 58,011 text examples, only 7,914 of them have each assigned annotator agree between all the emotions. That’s only 13.6% of all the examples.

This number might sound alarming. But when you think about the task, it’s also not that surprising. There are a lot of examples with a lot of emotion labels to pick from. Even if every example had 4 out of 5 annotators agree on the emotions, just the opinion of one person could nullify the agreement.

Still, the annotators clearly don't agree. So it'd be bad idea to blindly train a model on it.

But here's the thing.

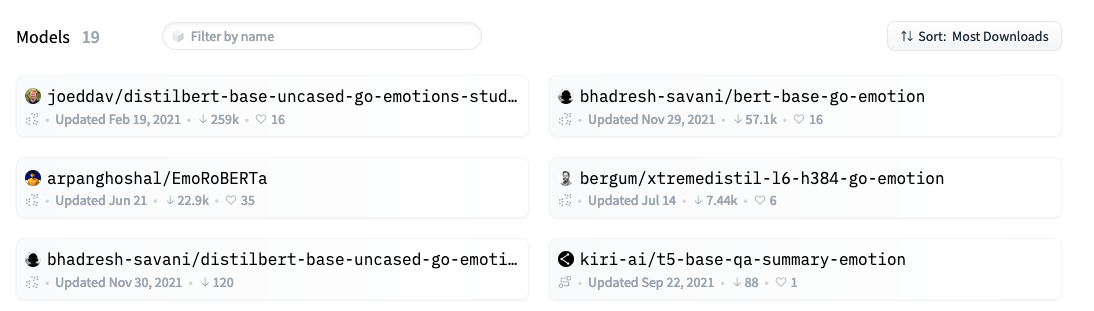

I can't blame anyone for assuming high quality that a dataset from Google. But it is a problem when you see just how many models were trained, and downloaded, on this dataset directly.

It's a tricky situation. I honestly think that the GoEmotions dataset is beautiful. It's so rare for a dataset to share the annotator information, and it's a great dataset to study because of it. But the dataset becomees ugly very quickly if you don't consider the annotator information.

I fear people forget that such a dataset exists because humans annotated it. And any model trained on it should respect the annotation process that happened before. You can't ignore this.

The real issue

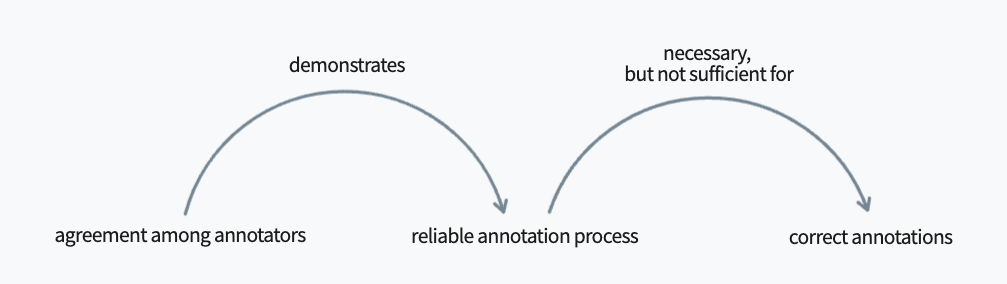

When annotators don’t agree we can’t assume correct annotations, but it’s the annotation process that needs to be addressed in order to fix that. By looking more rigourously at the process, we may be able to learn how to prevent the same issues from taking place in the future.

So what can we conclude here? What caused the annotators to disagree so often?

I think one part of the answer is that the task just invites lots of disagreement. For example; can we really expect a 50-year-old always to understand the internet slang on Reddit written by a 20-year-old? Agreeing on emotion in text requires a shared cultural understanding and Reddit is a forum with many subcommunities, each with its own jargon and in-jokes.

We should also remember that the texts are presented without context. This makes annotation of emotion incredibly hard because it's impossible to know if the text is meant to be taken literally or if we might be dealing with sarcasm. That means that each annotator has to guess sometimes.

Imagine you'd need to annotate this line:

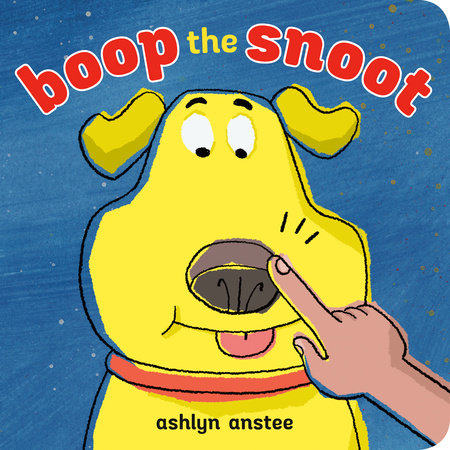

OMG THOSE TINY SHOES! desire to boop snoot intensifies

Which emotion would you pick? The annotators in the dataset picked a mixture of

desire, admiration, joy and suprise. But I can't blame you if you picked

approval , excitement or if you can't decide on an emotion. Many people

don't know what it means to

"boop a snoot".

??? quote "What 'boop a snoot' means." It means that you're gently touching your pets nose with an index finger as you make the sound "boop!"

Too many too quickly

At least three annotators saw each example in the dataset. But all these annotators come from a pool of 82 people and I think this is also part of the issue. It seems, but I could be wrong, that they immediately started with a large pool of annotators on Mechanical Turk which didn't leave any room to reflect and iterate.

Remember: different people are different. The paper behind the dataset mentioned that all of the annotators were native English speakers living in India. But even so, these are all different people with different life experiences trying to make sense of the emotion in Reddit text without having the context of the entire conversation around it. Interpretation of emotion is highly subjective, and we shouldn't expect everyone to agree immediately.

The dataset even gives us a few hints on how different people can experience these texts. For example, there are annotators like annotator #4 who only assign one or two emotions per example. Then again, there is annotator #31, who doesn't shy away from assigning seven emotions to a single example and even went as far as adding 12 emotions. With differences like that, it's no wonder there's very little agreement.

The problem persists if we only look at just a few annotators. The top three annotators are responsible for 14% of all the annotations, and just between these three, there is plenty of disagreement. There are 6820 overlapping examples between just these three annotators, of which only 3060 (~45%) are in full agreement!

If just these three annotators disagree so much, why even bother with the extra 79 annotators?

Issues in the Process

When you now take a step back and consider the annotation process, it's no surprise that so many annotators disagreed with each other. Just consider ...

1. There are just too many labels.

There are 28 different emotions to pick

from, and that's making it harder for annotators to maintain a consistent

definition of the labels. Not only that, but many of the labels are

subjective or are just too similar. Is fear really that different from

nervousness? What about love and caring? Do we really have a use case

where the difference between these two would matter?

2. There are too many annotators at the start.

You're bound to change your annotation process, especially when you're just getting started. Guidelines might change, and every annotator must be aware in order to keep the annotations consistent. But it's extremely difficult to keep 82 annotators aligned right from the start.

3. There is no clear application.

Even if we only had two labels and three annotators, we'd still be annotating without understanding the use case. Will these emotion labels be used for content moderation? If so, are we sure that these emotion labels are the most useful ones to have around? Do we also need to attach the entire Reddit conversation? What is the price of a bad annotation? These are all important questions to answer, and it's very hard to care about the right answer when you're so far away from the application.

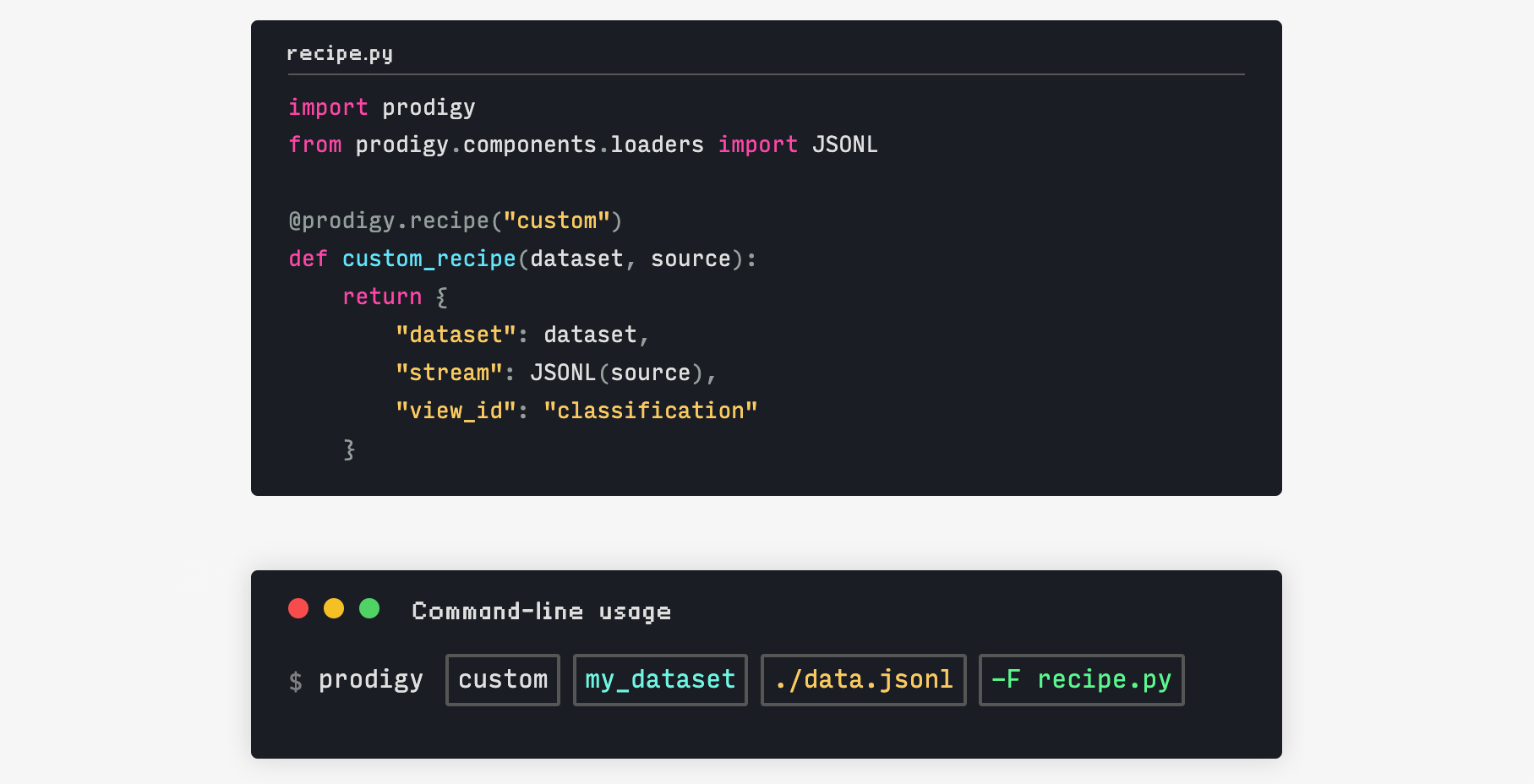

These pain points are very important to understand because they help inspire a better approach. You want to catch annotator disagreement early so that it can inspire improvements to the process. This is also what makes Prodigy a very cool tool. It was designed for iteration! It is fully scriptable in Python. Which means you can customise to your hearts content as you learn more and more about your problem.

You want to customize the approach as you learn about the problem you're solving. Especially early on, you want to be able to iterate on your annotation approach because you can't plan on what you'll find in your data once you start going through it.

A Better Approach

The annotation process becomes a lot easier when we limit ourselves to a smaller task. Creating a dataset for general emotion detection based on Reddit is very hard. There are too many subjective edge-cases and it may not translate well beyond Reddit. So instead, if we just focus on a small subset of emotions, one with a clear task in mind, it'll be a lot easier to bootstrap a useful dataset.

So let's imagine that we started much smaller. Let's say we're interested in content moderation and that we'd like to detect "anger" in text. Because we're only interested in a single label, we should be able to bootstrap an annotated dataset quite quickly. Imagine what you could learn with just two annotators, each annotating the same 1000 random examples.

- You might realize that you need the context of the conversation to rule out sarcasm. So you may want to update the annotation interface to allow for that.

- You might realize, based on a small sample of the dataset, that a trained model simple isn't accurate when it only sees a single utterance. So you realize early that you need to require more data to even feed the model.

- You might realize that "anger" on its own might not matter for moderation. One could rightfully be angry about something in the news, but it only becomes a problem if the user is "aggressive" to another person. So maybe an "aggressive" or "escalation" label makes more sense.

- You might realize that a lot of the Reddit data isn't relevant for your use case. You can go ahead and annotate everything, but you can also focus on a subset of the data. In the case of content moderation, we should probably only focus on subreddits that are similar to the forum that we're interested in moderating.

Nothing is stopping you from using another label, building a custom annotation interface, or switching to another subset of the data. But the improvement only comes when you're confronted with actual data and you're able to act on it. You can't dump a big text file on the internet and assume you can get useful training data just by waiting a month for other people to attach some labels. It can still be a good idea to expand the pool of annotators later, but only when there's a clear picture of what the annotation process should be.

You might not need a huge dataset either. Maybe a small dataset with precise and relevant labels gives you plenty of utility for your use-case. It's also much easier to iterate, reflect and improve the annotation process when you start small.

So when you start small, maybe you should celebrate.

!!! quote "Disclaimer" I currently work for Explosion, which is the company behind Prodigy. It's a great (!) tool. One that I've been using well before I joined the company, but folks should be aware that it's a paid tool and I now work for the company that makes it. Hence, a disclaimer.