Goodhart, Bad Metric

There are many ways to solve the wrong problem. One of the most iconic is failing to recognize Goodhart's law:

When a metric becomes a target to be optimized, it risks no longer being a useful metric.

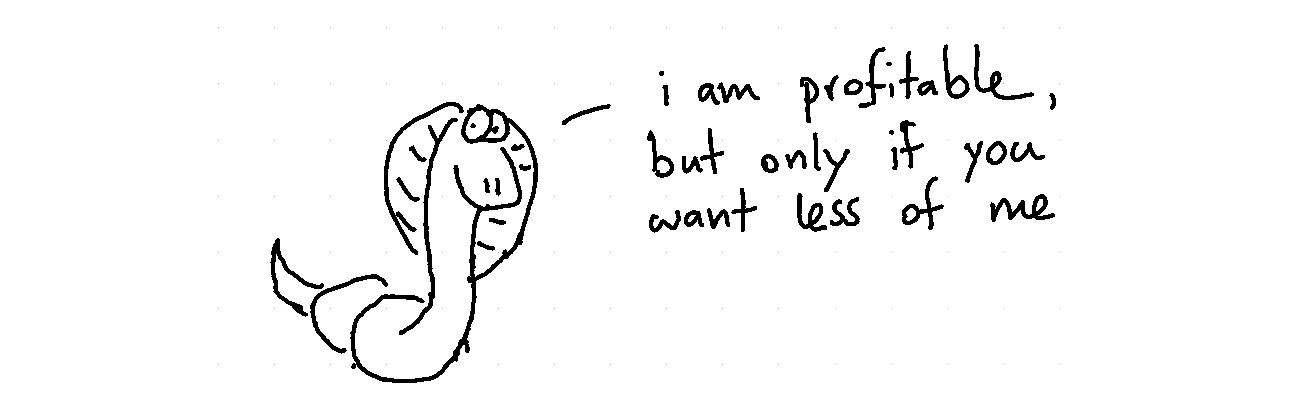

There are many anecdotes about this phenomenon on Wikipedia. The craziest one involves cobras in colonial India. The goal was to reduce the risk of snakebites by reducing the number of snakes.

As an incentive, the idea was to pay people to deliver dead cobras. This plan worked until people realized they could breed the snakes and make more money by doing so. Once the government realized this, it stopped paying for the cobras. This resulted in the cobra breeders releasing the snakes into the wild. As a result, the program effectively increased the number of wild cobras. There's a similar story about rats during French colonial rule in Vietnam.

But it's not just Wikipedia: the data-oriented enterprise has its fair share of tales too. I'll list a few fables that can be neither confirmed nor denied.

Fable 1: Bonus Time

Back in the heyday of data science, a large firm had an incentive program for its data scientists. If a scientist came up with an AB test that proved statistically significant (at the 1% level), they would receive a hefty bonus. The goal was to promote data science and to incentivize great data scientists.

The irony was that this metric was easy to hack if you were exactly that kind of scientist. The clever ones immediately introduced 1000 random AB tests, and with that many tests sheer numbers all but guaranteed a few "significant" results and the bonus that came with them. Once the bonus was "earned", the best thing to do was to leave the company (before anyone found out what happened).

You'd be correct to assume that the bonus policy effectively caused the excellent data scientists (the ones that understood probability theory) to leave the company.
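To make the fable concrete, here is a minimal sketch of why 1000 random tests are enough. It simulates AB tests in which both variants share the same conversion rate, so every "significant" result is a false positive. The sample size, the conversion rate and the choice of a pooled two-proportion z-test are my own assumptions for illustration; the fable does not specify them.

```python
import math
import random


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_a / n_a - conv_b / n_b) / se
    # Two-sided tail of the standard normal via the complementary error function.
    return math.erfc(abs(z) / math.sqrt(2))


def count_false_positives(n_tests=1000, n_users=1000, rate=0.1, alpha=0.01, seed=42):
    """Run `n_tests` AB tests with NO real effect and count the 'significant' ones."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_tests):
        # Both variants draw from the same conversion rate (assumed 10%).
        conv_a = sum(rng.random() < rate for _ in range(n_users))
        conv_b = sum(rng.random() < rate for _ in range(n_users))
        if two_proportion_p_value(conv_a, n_users, conv_b, n_users) < alpha:
            hits += 1
    return hits


# Chance of at least one false positive if the 1000 tests are independent.
p_at_least_one = 1 - (1 - 0.01) ** 1000

hits = count_false_positives()
print(f"'significant' tests without any real effect: {hits}")
print(f"P(at least one false positive) = {p_at_least_one:.5f}")
```

With a 1% threshold you would expect roughly ten of the thousand tests to come out "significant" by chance alone, which is plenty for a bonus.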

Fable 2: Suit Consultancy

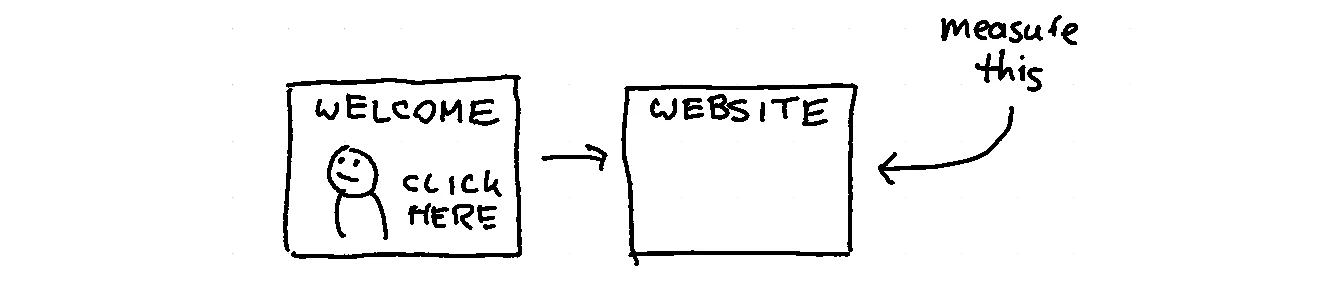

There was an article in Gartner that discussed the importance of bounce rate when you are optimising your website for new users. This inspired a consultancy, we'll call them SuitConsulting, to stress the importance of this metric to their client. They had meetings and made it clear to the analysts: they needed to find ways to reduce the bounce rate.

A junior analytical consultant from SuitConsulting came up with an idea: how about we add a flashy banner before we let the user onto the main page? The idea was that this new banner would set the mood for the new user. A new version of the page was made and the analyst started looking at the bounce rate; it had indeed improved! SuitConsulting made a presentation out of this, presented it to upper management, got paid and left with a new whitepaper demonstrating their expertise in web analytics.

By the time management noticed a drop in new users and sales, SuitConsulting was already gone. They hadn't realised that by introducing the banner they were left with the heavy users already familiar with the product. These users would not leave immediately, but all the new users were turned off by the extra click.

Fable 3: Recommendations

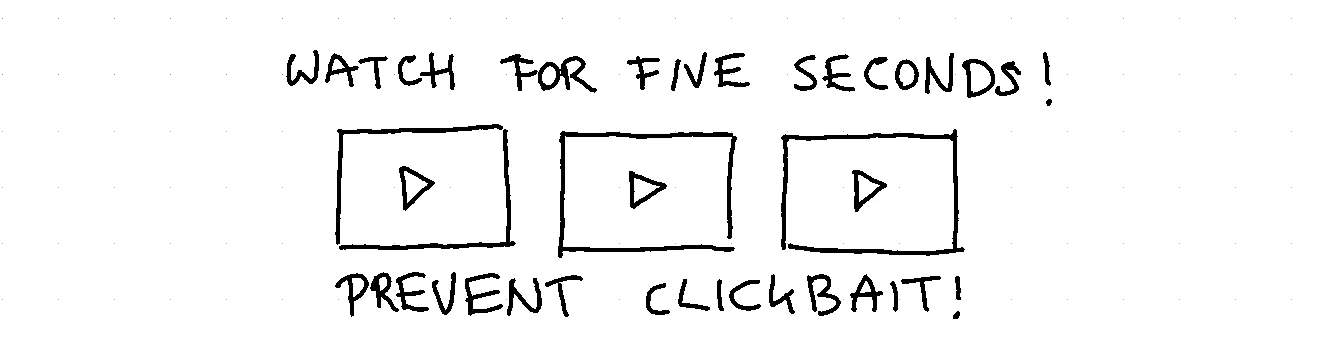

At a video-streaming service, management was concerned that the recommender engine would not serve recommendations that people were actually interested in. Rightly so: the goal of the recommender was to bring users content that would broaden their horizons. The streaming service wanted to make sure it did not resort to clickbait.

This got the science team thinking. Maybe a different cost function would help. Maybe the algorithm should only get a reward if the recommended video actually got watched for at least 75% of its total length. As soon as the first version of this algorithm was pushed, it started scoring really well on this metric.

A side effect was that the algorithm started favoring videos that were easy to watch for at least 75% of their total length: 2-minute fragment videos. These were typically the videos that were designed as clickbait.
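A small sketch shows why such a reward tilts towards short videos. It assumes that each viewer has a fixed attention budget in minutes, regardless of the video they are shown; that assumption and the budget numbers are made up for illustration and do not come from any real streaming data.

```python
def reward(watched_minutes, video_minutes, threshold=0.75):
    """Return 1 if at least `threshold` of the video was watched, else 0."""
    return int(watched_minutes / video_minutes >= threshold)


def mean_reward(video_minutes, attention_budgets):
    """Average reward for one video over a group of viewers."""
    # A viewer stops when the video ends or when their attention runs out.
    rewards = [
        reward(min(budget, video_minutes), video_minutes)
        for budget in attention_budgets
    ]
    return sum(rewards) / len(rewards)


# Hypothetical attention budgets (in minutes) for nine viewers.
attention_budgets = [1, 2, 3, 5, 8, 13, 21, 34, 55]

short_clip = mean_reward(2, attention_budgets)   # 2-minute fragment
long_film = mean_reward(40, attention_budgets)   # 40-minute documentary

print(f"2-minute clip:  {short_clip:.2f}")
print(f"40-minute film: {long_film:.2f}")
```

Eight of the nine viewers get through 75% of the clip, while only two get that far into the film. The reward says nothing about which of the two broadened anyone's horizons.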

Patterns

The role of a metric should be limited to being a proxy, since metrics are easily perverted and misinterpreted. They should not replace common sense or judgment.

Metrics do make me wonder a bit about my own profession. When you do machine learning, even with cross-validation, you typically optimize for a single metric. Then I was reminded of an example that demonstrates why we should be worried.

Example

The dataset below involves chickens. The use-case is to predict the growth per chicken such that you can determine the best diet and also predict the weight of the chicken. It's a dataset that comes with the R language, but I've also made it available in scikit-lego. In the code below, you'll see it being used in a grid-search.

```python
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV
from sklego.datasets import load_chicken

# Load the chicken weight dataset as a pandas DataFrame.
df = load_chicken(give_pandas=True)
X, y = df[['time', 'diet']], df['weight']

# Grid-search over tree count and depth with 10-fold cross-validation.
mod = GridSearchCV(estimator=GradientBoostingRegressor(),
                   cv=10,
                   n_jobs=-1,
                   param_grid={'n_estimators': [10, 50, 100],
                               'max_depth': [3, 5, 7]})
mod.fit(X, y)
```

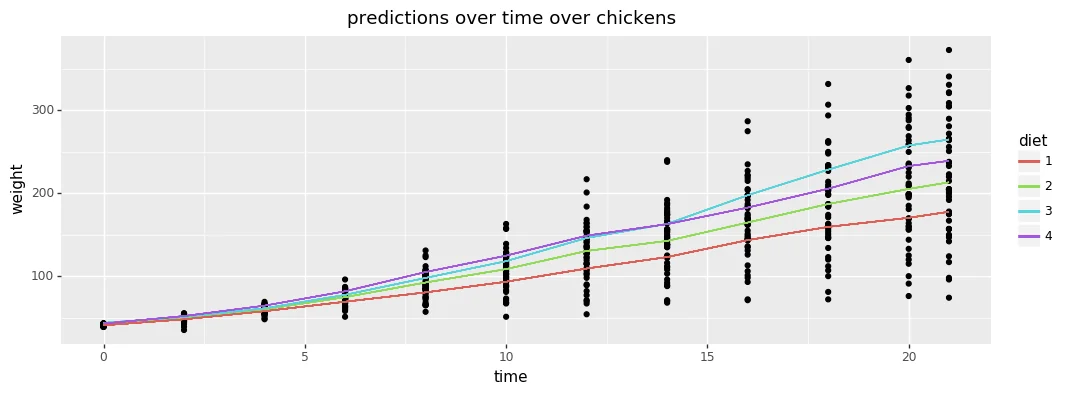

This model is cross-validated, and the chart shows the predictions of the best model. We can see that some diets seem to lead to more growth than others.

This model has been selected because it is the best at predicting the chickens' weight. We could even extend the grid-search by looking for more types of models. The problem is that it does not matter since we're dealing with a vanity metric. Let's consider the chart of all the growing paths for all the chickens.

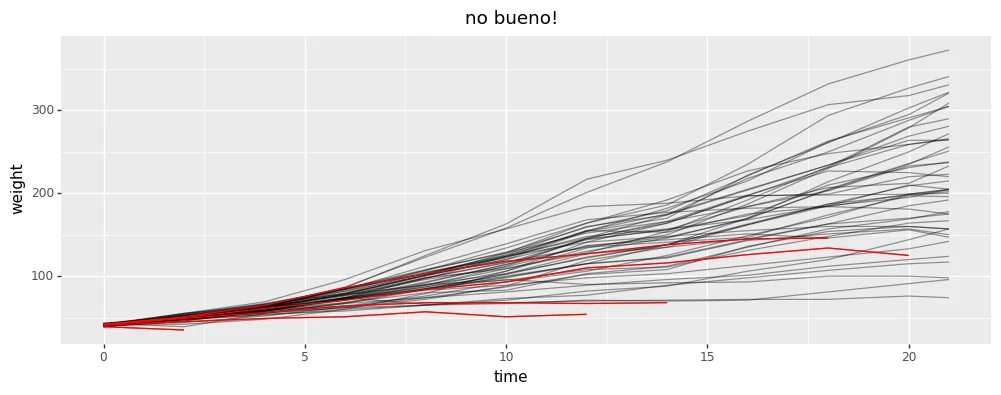

A couple of the paths indicate that some chickens died prematurely. If we recognize that our model has no way of capturing this, then we should also acknowledge that the grid-search is a dangerous distraction. It distracts us from understanding our data. It may even be the case that the diet with the best mean growth is also the diet with the most premature deaths.
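A check like this takes only a few lines. The sketch below counts, per diet, the chickens whose last measurement comes before the final time point in the data. It runs on a handful of made-up rows; the `chick` column name is an assumption on my part, and treating an early last measurement as a premature death is an interpretation, not something the data states.

```python
from collections import defaultdict


def dropouts_per_diet(rows):
    """Count, per diet, chickens whose last measurement precedes the final time point."""
    last_seen = {}  # chick -> (diet, last time observed)
    for row in rows:
        chick, diet, time = row["chick"], row["diet"], row["time"]
        if chick not in last_seen or time > last_seen[chick][1]:
            last_seen[chick] = (diet, time)

    final_time = max(time for _, time in last_seen.values())

    summary = defaultdict(lambda: {"chicks": 0, "dropouts": 0})
    for diet, time in last_seen.values():
        summary[diet]["chicks"] += 1
        summary[diet]["dropouts"] += int(time < final_time)
    return dict(summary)


# Made-up toy rows, NOT the real dataset. With the real data you could try
# rows = load_chicken(give_pandas=True).to_dict("records"),
# assuming the columns are named "chick", "diet" and "time".
toy_rows = [
    {"chick": 1, "diet": 1, "time": 0},
    {"chick": 1, "diet": 1, "time": 21},
    {"chick": 2, "diet": 1, "time": 0},
    {"chick": 2, "diet": 1, "time": 8},   # measurements stop early
    {"chick": 3, "diet": 2, "time": 0},
    {"chick": 3, "diet": 2, "time": 21},
]

summary = dropouts_per_diet(toy_rows)
for diet, counts in sorted(summary.items()):
    print(diet, counts)
```

No amount of hyperparameter tuning will surface these numbers, because the cross-validated score never asks for them.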

Conclusion

There's danger in the act of machine learning.

You might make the argument that some of my examples merely demonstrate people putting faith in a faulty metric. There's sense in that. Not all metrics are super harmful. That said, optimizing religiously is a natural consequence of introducing a metric. This is what's so dangerous about them.

Giving it to an algorithm will only make it worse. The algorithm will suggest that you need to focus on tuning instead of making you wonder if you're solving the right problem.

Let's be frank. If we're dealing with a vanity metric, then an algorithm sure is a great way to hide it. A grid search is not enough to protect you against this.