just is just amazing

Just is an alternative to make. It lets you write files, called justfiles, that look like this:

    # Show current directory tree
    tree depth="3":
        find . -maxdepth {{depth}} -print | sed -e 's;[^/]*/;|____;g;s;____|; |;g'

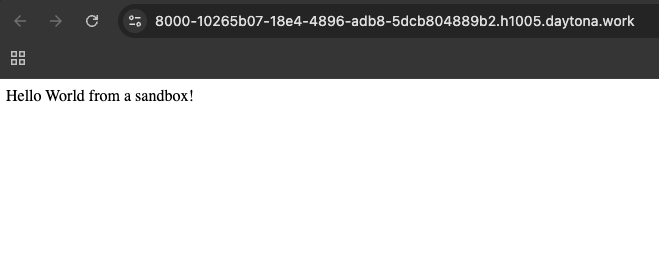

    # Quick HTTP server on port 8000
    serve port="8000":
        python3 -m http.server {{port}}

From here you can run just tree to execute the commands under that recipe, just like make. However, notice how easy it is to add arguments here? Each parameter has a default value that you can override, so you can do just serve 8080 too! That's already a killer feature but there's a bunch more to like.
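Required and optional arguments can be mixed in one recipe. Here is a minimal sketch; the greet recipe and its parameter names are hypothetical and only serve as an illustration:

```just
# Hypothetical recipe: `name` is required, `greeting` has a default.
# Parameters with defaults must come after the required ones.
greet name greeting="hello":
    @echo "{{greeting}}, {{name}}"
```

Running just greet world prints hello, world, while just greet world hi overrides the default and prints hi, world.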

global alias

The real killer feature, for me, is the -g flag. It lets me refer to a central justfile that contains all of my common utils. From there, I can also add this alias to my .zshrc file.

    # Global Just utilities alias
    alias kmd='just -g'

This is amazing because it gives you one global justfile with all the utilities that you like to reuse across projects. So if there are commands that you would love to reuse, you now have a convenient place to put them.

Especially with tools like uv for Python that let you inline all the dependencies, you can really move a lot of utils in. Not only that, you can also store this justfile with all your scripts and put it on GitHub.
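To make the uv idea concrete, here is a rough sketch of a recipe that carries its own Python dependencies. It assumes uv is installed and that your env supports the -S flag; the recipe name hello-rich and the choice of the rich package are hypothetical:

```just
# Hypothetical recipe: a Python script with inline dependencies.
# The shebang hands the recipe body to uv, which reads the
# "# /// script" metadata block and installs rich before running.
hello-rich:
    #!/usr/bin/env -S uv run --script
    # /// script
    # requires-python = ">=3.11"
    # dependencies = ["rich"]
    # ///
    from rich import print
    print("[bold green]Hello from a global recipe![/bold green]")
```

With the alias from before, kmd hello-rich would run this from any folder without a virtual environment to manage.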

working directories

There are more fancy features to help this pattern.

I have this command to start a web server that contains my blogging editor. Normally I would have to cd into the right folder and then call make write, and the same goes for make deploy. Now I can add these to my global justfile via the working-directory attribute.

    # Deploy the blog
    [working-directory: '/Users/vincentwarmerdam/Development/blog']
    blog-deploy:
        make deploy

This lets me wire the make commands of a project that I use a lot into a global file, so that I can run them even when I am not in the project folder.
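The same attribute would cover the make write command mentioned earlier. A minimal sketch, where the recipe name blog-write is hypothetical and the path is simply reused from the recipe above:

```just
# Start the blogging editor (hypothetical companion to blog-deploy)
[working-directory: '/Users/vincentwarmerdam/Development/blog']
blog-write:
    make write
```

Because the attribute is set per recipe, a single global file can hold recipes for several projects, each with its own directory.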

From now on I can type kmd blog-deploy from any folder to deploy the blog, and a matching recipe around make write does the same for the editor.

docs

It's also pretty fun to have an LLM add some fancy bash-fu.

    # Extract various archive formats
    extract file:
        #!/usr/bin/env bash
        if [ -f "{{file}}" ]; then
            case "{{file}}" in
                *.tar.bz2) tar xjf "{{file}}" ;;
                *.tar.gz)  tar xzf "{{file}}" ;;
                *.tar.xz)  tar xJf "{{file}}" ;;
                *.tar)     tar xf "{{file}}" ;;
                *.zip)     unzip "{{file}}" ;;
                *.gz)      gunzip "{{file}}" ;;
                *.bz2)     bunzip2 "{{file}}" ;;
                *.rar)     unrar x "{{file}}" ;;
                *.7z)      7z x "{{file}}" ;;
                *)         echo "Don't know how to extract {{file}}" ;;
            esac
        else
            echo "{{file}} is not a valid file"
        fi

    # Kill process by port
    killport port:
        lsof -ti:{{port}} | xargs kill -9

Notice how each command has some comments on top? Those are visible from the command line too! That means you'll also have inline documentation.

    > just -g --list
    Available recipes:
        extract file  # Extract various archive formats
        killport port # Kill process by port
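When no recipe name is given, just runs the first recipe in the file, so a bare just -g only prints this listing if the first recipe asks for it. A minimal sketch of such a recipe, assuming it is placed at the top of the global justfile (the name default is a convention, not a requirement):

```just
# List available recipes (runs when no recipe name is given)
default:
    @just --justfile "{{justfile()}}" --list
```

The justfile() function expands to the path of the current justfile, so the listing comes from the global file regardless of which folder you are in.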

chains

You can also chain commands together, just like in make, but there is a twist.

    # Stuff
    stuff: clean
        @echo "stuff"

    # Clean
    clean:
        @echo "clean"

If you were to run just stuff it would run clean first, but you can also chain recipes directly on the command line. So if you had this file:

    # Stuff
    stuff:
        @echo "stuff"

    # Clean
    clean:
        @echo "clean"

Then you could also do this:

    just clean stuff

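Each recipe will only run once per invocation, so you can't get multiple runs via just clean clean clean, but you can specify whether a dependency needs to run before or after a recipe. Dependencies listed before && run first, and the ones listed after it run once the recipe is done.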

    a:
        echo 'A!'

    b: a && c d
        echo 'B!'

    c:
        echo 'C!'

    d:
        echo 'D!'

Running just b will show:

    echo 'A!'
    A!
    echo 'B!'
    B!
    echo 'C!'
    C!
    echo 'D!'
    D!

You can also choose to run just recursively by calling just within a recipe definition, so expect a lot of freedom here.
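A recursive call could look roughly like this. The recipe names are hypothetical, and the sketch assumes a project-level justfile; in a global justfile the inner call would need to be just -g build instead:

```just
# Hypothetical recipes illustrating a recursive call
build:
    @echo "building"

release:
    @echo "preparing release"
    @just build
    @echo "done"
```

Unlike a dependency, which runs entirely before or after the recipe, the inner call here runs in the middle of release, between the two echo lines.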

just scratching the surface

just feels like one of those rare moments where you're exposed to a new tool and within half an hour you are already more productive.

I'm really only scratching the surface of what you can do with this tool, so make sure you check the documentation.